I’ve been fascinated by a recurring scene element in the TV show Westworld. At its core, the show is a rather classical story about humans losing control of the technology they created. It’s the age-old tale of the perils of knowledge, meant to warn us against the dangers of technological progress. Most cultures and religions have similar stories, be it Icarus flying too close to the sun until his wings melt, or the builders of the Tower of Babel being punished for reaching too close to heaven.

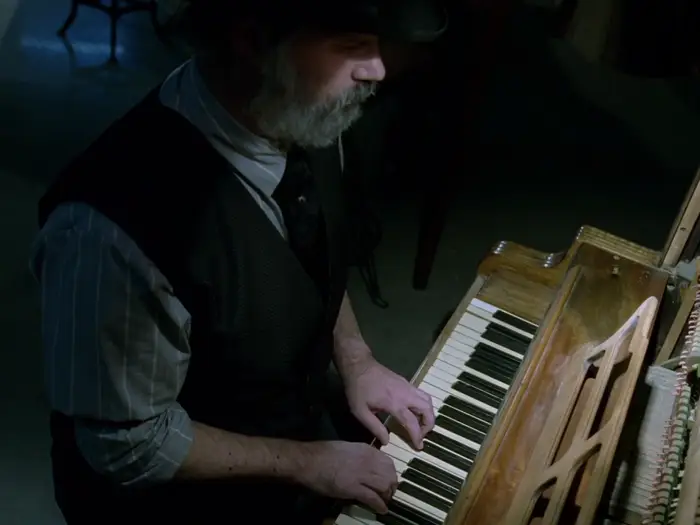

The show is filled with interesting characters, but the one I find most alluring never speaks: the pianist we often see in the background. In the show, lifelike robotic AIs entertain human guests in a pastiche of American westerns, set in boisterous saloons and barren deserts. The AIs think they really do live in a western, but the world outside is a high-tech Eden. In some saloon scenes, a pianist plays a mechanical piano. Early on, he sits in front of it and plays it himself. As the AIs gradually gain sentience, the pianist becomes less and less involved in operating the piano, until he is gone altogether and the piano plays itself. The same mechanical piano also appears in the opening credits and in scenes with the AIs’ creator.

The pianist in the opening credits plays the keys

The pianist in the opening credits gives up playing

The piano plays itself

The saloon’s pianist plays the keys

The saloon’s pianist gives up playing

The saloon’s pianist is now dead; the piano plays itself

It’s a really interesting piece of background storytelling that mirrors the ideas of the show’s AI creator. Humans run wild in his “theme park”, introducing randomness into the AIs’ programmed storylines and forcing them to adapt. He theorizes that this will gradually lead them to develop an “inner voice”, something akin to consciousness. In the show, this is what happens: eventually the AIs stop obeying their programmed “inner voice” and follow their own instead.

I find the idea of a creation taking on a life of its own very appealing. It must be a great feeling to see something you made go in a direction you did not predict: people using a tool you built to create things you could not begin to imagine, communities and cultures arising from platforms you built… Of course, in Westworld the result of a creator losing control of his creations takes a predictably dark and gory path, and the AIs proceed to paint the viewer’s screen in fifty shades of red.

I wonder why the myth of losing control of one’s creations is so pervasive across human cultures. I can’t think of any popular Frankenstein-like tale that ends well for its protagonists. Why is it so hard to believe that we could create something with a mind of its own that does not turn on its creator? The common thread of these tales is that the creations start out pure of thought, almost childlike, and that only contact with rotten humans turns them into monsters. I suspect there’s a familiar streak of human narcissism in that idea, and that in reality an intelligent AI’s thoughts would seem alien and unpredictable to us.

Widely shared stories like Frankenstein, Icarus, or Westworld shape our societies, as they form the basis of a common cultural weave that holds them together. This is why you will see references to Frankenstein in the debate over genetically modified (GM) foods, or why discussions of AI “ethics” tend to follow predictable patterns of doom-mongering. I think we need more stories of a different kind, in which high-tech creations produce believable, unmitigated good.

I hope one day we will manage to spark a symphony which plays itself, and I for one will enjoy watching its beautiful notes unfold.